Introduction

In the previous blog post, we focused on the inner workings of the Kubernetes ClusterIP service, which exposes Pods within a Kubernetes cluster. In this post, we focus on exposing Pods to clients and services outside the cluster, using two service types: NodePort and LoadBalancer.

The scenarios discussed in this post were built on AWS; however, the same concepts and logic should carry over to other environments.

Kubernetes NodePort

The simplest way to expose a service externally is the NodePort service, whereby every node in the cluster listens on the same TCP port, allocated by default from the range 30000-32767.

The flow works as follows:

The client (the source) sends the request to an Elastic IP address. In this example we assume 1.1.1.1 and 1.1.1.2, corresponding to Worker Nodes 1 and 2.

The Internet Gateway (IGW) performs a static NAT, translating those public addresses to their corresponding private addresses. In the figure we assume that traffic hits Worker Node 1, so the source remains the client and the destination becomes eth0's address on Worker Node 1 (192.168.45.167).

Based on the NodePort service configuration, the node listens on port 30730. When traffic hits Worker Node 1 on port 30730, the destination is DNATed to the nginx-node-port Pod address (192.168.22.71).

For return traffic to follow the same path, an SNAT also occurs, changing the source address from the client to Worker Node 1's eth0 address (192.168.45.167).
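The address rewrites in the steps above can be traced with a small simulation. This is only a sketch of the NAT sequence, not of how the IGW or the node implements it; the client address 203.0.113.10 is a hypothetical documentation address, and the `Packet` type and function names are illustrative.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    dport: int


# Addresses taken from the walkthrough; CLIENT_IP is a hypothetical example.
CLIENT_IP = "203.0.113.10"
ELASTIC_IP_NODE1 = "1.1.1.1"
NODE1_ETH0 = "192.168.45.167"
POD_IP = "192.168.22.71"
NODE_PORT = 30730
TARGET_PORT = 80


def igw_static_nat(pkt):
    """Internet Gateway: translate the Elastic IP to the node's private address."""
    mapping = {ELASTIC_IP_NODE1: NODE1_ETH0}
    return replace(pkt, dst=mapping.get(pkt.dst, pkt.dst))


def node_dnat(pkt):
    """Worker node: rewrite NodePort traffic to the Pod address and target port."""
    if pkt.dst == NODE1_ETH0 and pkt.dport == NODE_PORT:
        return replace(pkt, dst=POD_IP, dport=TARGET_PORT)
    return pkt


def node_snat(pkt):
    """Worker node: rewrite the source so that replies return through this node.

    Applied unconditionally here for brevity.
    """
    return replace(pkt, src=NODE1_ETH0)


def trace(pkt):
    """Return the packet as seen before and after each translation step."""
    hops = [pkt]
    for step in (igw_static_nat, node_dnat, node_snat):
        pkt = step(pkt)
        hops.append(pkt)
    return hops


for hop in trace(Packet(CLIENT_IP, ELASTIC_IP_NODE1, NODE_PORT)):
    print(hop)
```

The last hop printed is the packet as the Pod would see it: sourced from the node rather than from the client.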

It is also worth noting that a NodePort service also creates a ClusterIP that is reachable from within the Kubernetes cluster, shown as 10.100.199.193 in the service description below.

    Name:                     nginx-node-port
    Namespace:                default
    Labels:                   run=nginx-node-port
    Annotations:              <none>
    Selector:                 run=nginx-node-port
    Type:                     NodePort
    IP Family Policy:         SingleStack
    IP Families:              IPv4
    IP:                       10.100.199.193
    IPs:                      10.100.199.193
    Port:                     <unset>  80/TCP
    TargetPort:               80/TCP
    NodePort:                 <unset>  30730/TCP
    Endpoints:                192.168.22.71:80
    Session Affinity:         None
    External Traffic Policy:  Cluster
    Events:                   <none>
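For reference, a Service matching the description above could be declared roughly as follows. This is a sketch, not the manifest that was actually used in this post: the helper `node_port_service_manifest` is hypothetical, and the values are copied from the output above. The manifest is emitted as JSON, which kubectl accepts alongside YAML.

```python
import json

# Default NodePort allocation range: 30000-32767 inclusive.
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)


def node_port_service_manifest(name, selector, port, target_port, node_port=None):
    """Build a NodePort Service manifest as a plain dict (hypothetical helper).

    If node_port is omitted, the API server allocates one from the range.
    """
    if node_port is not None and node_port not in DEFAULT_NODE_PORT_RANGE:
        raise ValueError(f"nodePort {node_port} is outside 30000-32767")
    port_spec = {"protocol": "TCP", "port": port, "targetPort": target_port}
    if node_port is not None:
        port_spec["nodePort"] = node_port
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "labels": dict(selector)},
        "spec": {
            "type": "NodePort",
            "selector": dict(selector),
            "ports": [port_spec],
        },
    }


manifest = node_port_service_manifest(
    "nginx-node-port", {"run": "nginx-node-port"}, 80, 80, node_port=30730
)
print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and passed to `kubectl apply -f`.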

IPTables Inner Workings

In this section, we explore the iptables rules that implement a NodePort service in a Kubernetes cluster.

PREROUTING chain: Our journey begins with the PREROUTING chain, which sends all traffic to the KUBE-SERVICES chain.

KUBE-SERVICES: Within KUBE-SERVICES, the rule pointing to KUBE-SVC-3KJW6L7XGSFMVIPO matches traffic destined to the service's ClusterIP (10.100.199.193) on port 80. Traffic arriving from outside the cluster on the NodePort does not match this rule; it falls through to the last rule, which jumps to KUBE-NODEPORTS for packets addressed to a local address of the node.

KUBE-NODEPORTS: Next, we reach KUBE-NODEPORTS, where the first rule matches TCP traffic with a destination port of 30730 and sends it to KUBE-EXT-3KJW6L7XGSFMVIPO. That chain marks the packet for masquerading (KUBE-MARK-MASQ) and then jumps to KUBE-SVC-3KJW6L7XGSFMVIPO.

KUBE-SVC-3KJW6L7XGSFMVIPO: This chain jumps to KUBE-SEP-KS7GFULRFICP7QTF, the chain for the service's single endpoint.

KUBE-SEP-KS7GFULRFICP7QTF: Here, a DNAT rule performs destination NAT to the Pod address 192.168.22.71 on port 80.
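The chain walk above can be modeled as a lookup through ordered rule lists. The sketch below is a deliberate simplification rather than a reimplementation of iptables: it omits the non-terminating KUBE-MARK-MASQ rules, approximates the `dst-type LOCAL` match by comparing against one node address, and stops when no rule matches instead of returning to the calling chain. The packet dictionaries and the `walk` function are illustrative.

```python
SVC = "KUBE-SVC-3KJW6L7XGSFMVIPO"
EXT = "KUBE-EXT-3KJW6L7XGSFMVIPO"
SEP = "KUBE-SEP-KS7GFULRFICP7QTF"

CLUSTER_IP = "10.100.199.193"
NODE_IP = "192.168.26.112"  # eth0 of the node the rules were captured on


def always(pkt):
    return True


# Each chain is an ordered list of (match, target) pairs; the first match wins.
CHAINS = {
    "PREROUTING": [(always, "KUBE-SERVICES")],
    "KUBE-SERVICES": [
        (lambda p: p["dst"] == CLUSTER_IP and p["dport"] == 80, SVC),
        # Stand-in for "ADDRTYPE match dst-type LOCAL".
        (lambda p: p["dst"] == NODE_IP, "KUBE-NODEPORTS"),
    ],
    "KUBE-NODEPORTS": [(lambda p: p["dport"] == 30730, EXT)],
    EXT: [(always, SVC)],
    SVC: [(always, SEP)],
    SEP: [(always, "DNAT:192.168.22.71:80")],
}


def walk(pkt, chain="PREROUTING"):
    """Return (chains visited, DNAT destination or None) for a packet."""
    path = [chain]
    while True:
        for match, target in CHAINS[chain]:
            if match(pkt):
                break
        else:
            return path, None  # no rule matched in this chain
        if target.startswith("DNAT:"):
            return path, target[len("DNAT:"):]
        chain = target
        path.append(chain)


path, dnat_to = walk({"dst": NODE_IP, "dport": 30730})
print(" -> ".join(path))
print("DNAT to", dnat_to)
```

Calling `walk` with the ClusterIP and port 80 as destination shows the shorter in-cluster path, which skips KUBE-NODEPORTS and the KUBE-EXT chain.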

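For those interested in the SNAT that makes return traffic come back through the node, its details can be found in the output below by following this pattern:

KUBE-POSTROUTING

AWS-SNAT-CHAIN-0

AWS-SNAT-CHAIN-1: SNAT takes place with an address of 192.168.26.112. Note that this iptables output was extracted from Worker Node 2, and 192.168.26.112 is that node's eth0 address. Note also that, as listed, AWS-SNAT-CHAIN-0 only hands packets to AWS-SNAT-CHAIN-1 when their destination lies outside 192.168.0.0/16; packets marked by KUBE-MARK-MASQ are normally masqueraded in KUBE-POSTROUTING, whose rules are not included in the output.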

    [ec2-user@ip-192-168-26-112 ~]$ sudo iptables -t nat -vL

    Chain PREROUTING (policy ACCEPT 36 packets, 2160 bytes)
    pkts bytes target prot opt in out source destination
    2208 147K KUBE-SERVICES all -- any any anywhere anywhere /* kubernetes service portals */

    Chain KUBE-SERVICES (2 references)
    pkts bytes target prot opt in out source destination
    0 0 KUBE-SVC-3KJW6L7XGSFMVIPO tcp -- any any anywhere ip-10-100-199-193.ec2.internal /* default/nginx-node-port cluster IP */ tcp dpt:http
    52 3120 KUBE-NODEPORTS all -- any any anywhere anywhere /* kubernetes service nodeports; NOTE: this must be the last rule in this chain */ ADDRTYPE match dst-type LOCAL

    Chain KUBE-NODEPORTS (1 references)
    pkts bytes target prot opt in out source destination
    0 0 KUBE-EXT-3KJW6L7XGSFMVIPO tcp -- any any anywhere anywhere /* default/nginx-node-port */ tcp dpt:30730

    Chain KUBE-EXT-3KJW6L7XGSFMVIPO (1 references)
    pkts bytes target prot opt in out source destination
    0 0 KUBE-MARK-MASQ all -- any any anywhere anywhere /* masquerade traffic for default/nginx-node-port external destinations */
    0 0 KUBE-SVC-3KJW6L7XGSFMVIPO all -- any any anywhere anywhere

    Chain KUBE-SVC-3KJW6L7XGSFMVIPO (2 references)
    pkts bytes target prot opt in out source destination
    0 0 KUBE-SEP-KS7GFULRFICP7QTF all -- any any anywhere anywhere /* default/nginx-node-port -> 192.168.22.71:80 */

    Chain KUBE-SEP-KS7GFULRFICP7QTF (1 references)
    pkts bytes target prot opt in out source destination
    0 0 KUBE-MARK-MASQ all -- any any ip-192-168-22-71.ec2.internal anywhere /* default/nginx-node-port */
    0 0 DNAT tcp -- any any anywhere anywhere /* default/nginx-node-port */ tcp to:192.168.22.71:80

    Chain POSTROUTING (policy ACCEPT 246 packets, 16109 bytes)
    pkts bytes target prot opt in out source destination
    82418 5146K KUBE-POSTROUTING all -- any any anywhere anywhere /* kubernetes postrouting rules */
    81338 5079K AWS-SNAT-CHAIN-0 all -- any any anywhere anywhere /* AWS SNAT CHAIN */

    Chain AWS-SNAT-CHAIN-0 (1 references)
    pkts bytes target prot opt in out source destination
    59065 3589K AWS-SNAT-CHAIN-1 all -- any any anywhere !ip-192-168-0-0.ec2.internal/16 /* AWS SNAT CHAIN */

    Chain AWS-SNAT-CHAIN-1 (1 references)
    pkts bytes target prot opt in out source destination
    51947 3162K SNAT all -- any !vlan+ anywhere anywhere /* AWS, SNAT */ ADDRTYPE match dst-type !LOCAL to:192.168.26.112 random-fully

Kubernetes Load Balancer Service

Load Balancer Provisioning

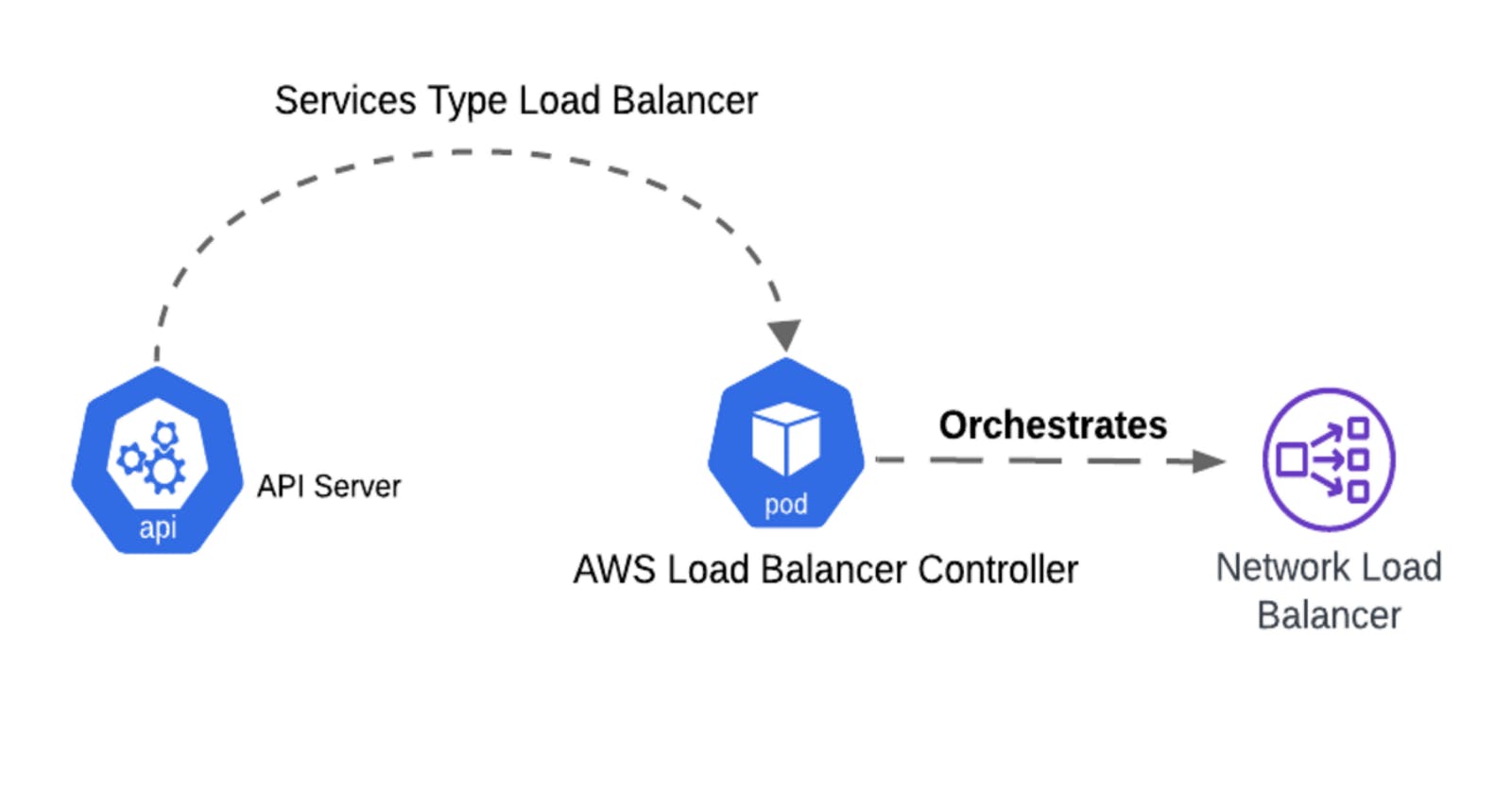

The LoadBalancer service is another type of Kubernetes service, in which load balancers outside the Kubernetes cluster are orchestrated to serve as ingress points to a group of Pods within the cluster.

This generally requires a controller (running as a Pod) that watches the Kubernetes API server for activity involving services of type LoadBalancer. When the controller sees such a service created, it creates an external load balancer mapped to that service. In AWS, this role is filled by the AWS Load Balancer Controller, which also needs IAM permissions to create load balancers within AWS.
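The watch-and-reconcile pattern described above can be sketched in a few lines. This is not the AWS Load Balancer Controller's code: `FakeCloud` is a stand-in for a cloud provider API, the event dictionaries are a simplified, hypothetical shape loosely modeled on Kubernetes watch events (ADDED, MODIFIED, DELETED), and `reconcile` is an illustrative name.

```python
from dataclasses import dataclass, field


@dataclass
class FakeCloud:
    """In-memory stand-in for a cloud load balancer API (not a real SDK)."""

    load_balancers: dict = field(default_factory=dict)

    def ensure_load_balancer(self, key, ports):
        # Create the load balancer, or update it if it already exists.
        self.load_balancers[key] = {"ports": list(ports)}

    def delete_load_balancer(self, key):
        self.load_balancers.pop(key, None)


def reconcile(event, cloud):
    """React to one Service event; only type LoadBalancer is acted upon."""
    svc = event["object"]
    if svc["type"] != "LoadBalancer":
        return
    key = f'{svc["namespace"]}/{svc["name"]}'
    if event["type"] == "DELETED":
        cloud.delete_load_balancer(key)
    else:  # ADDED or MODIFIED
        cloud.ensure_load_balancer(key, svc["ports"])


cloud = FakeCloud()
events = [
    {"type": "ADDED", "object": {"namespace": "default", "name": "nginx1",
                                 "type": "LoadBalancer", "ports": [80]}},
    {"type": "ADDED", "object": {"namespace": "default", "name": "nginx-node-port",
                                 "type": "NodePort", "ports": [80]}},
]
for event in events:
    reconcile(event, cloud)
print(cloud.load_balancers)
```

Only the service of type LoadBalancer results in an external load balancer; the NodePort service is ignored by this controller.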

The logs below are taken from the AWS Load Balancer Controller Pod and show the controller creating resources such as load balancers, target groups, and listeners, which are the objects that make up a load balancer in AWS. Other cloud providers offer similar controllers that orchestrate their own load balancer offerings.

[cloudshell-user@ip-10-138-162-38 ~]$ kubectl logs -n kube-system aws-load-balancer-controller-5749768698-8jslx

{"level":"info","ts":"2024-01-23T19:24:55Z","msg":"setting service loadBalancerClass","service":"nginx1","loadBalancerClass":"service.k8s.aws/nlb"}

{"level":"info","ts":"2024-01-23T19:24:55Z","logger":"controllers.service","msg":"successfully built model","model":"{\"id\":\"default/nginx1\",\"resources\":{\"AWS::ElasticLoadBalancingV2::Listener\":{\"80\":{\"spec\":{\"loadBalancerARN\":{\"$ref\":\"#/resources/AWS::ElasticLoadBalancingV2::LoadBalancer/LoadBalancer/status/loadBalancerARN\"},\"port\":80,\"protocol\":\"TCP\",\"defaultActions\":[{\"type\":\"forward\",\"forwardConfig\":{\"targetGroups\":[{\"targetGroupARN\":{\"$ref\":\"#/resources/AWS::ElasticLoadBalancingV2::TargetGroup/default/nginx1:80/status/targetGroupARN\"}}]}}]}}},\"AWS::ElasticLoadBalancingV2::LoadBalancer\":{\"LoadBalancer\":{\"spec\":{\"name\":\"k8s-default-nginx1-36dbca87e3\",\"type\":\"network\",\"scheme\":\"internet-facing\",\"ipAddressType\":\"ipv4\",\"subnetMapping\":[{\"subnetID\":\"subnet-0bb01040718d4acb6\"},{\"subnetID\":\"subnet-0c11a935b51724fa2\"}]}}},\"AWS::ElasticLoadBalancingV2::TargetGroup\":{\"default/nginx1:80\":{\"spec\":{\"name\":\"k8s-default-nginx1-25a492f569\",\"targetType\":\"ip\",\"port\":80,\"protocol\":\"TCP\",\"ipAddressType\":\"ipv4\",\"healthCheckConfig\":{\"port\":\"traffic-port\",\"protocol\":\"TCP\",\"intervalSeconds\":10,\"timeoutSeconds\":10,\"healthyThresholdCount\":3,\"unhealthyThresholdCount\":3},\"targetGroupAttributes\":[{\"key\":\"proxy_protocol_v2.enabled\",\"value\":\"false\"}]}}},\"K8S::ElasticLoadBalancingV2::TargetGroupBinding\":{\"default/nginx1:80\":{\"spec\":{\"template\":{\"metadata\":{\"name\":\"k8s-default-nginx1-25a492f569\",\"namespace\":\"default\",\"creationTimestamp\":null},\"spec\":{\"targetGroupARN\":{\"$ref\":\"#/resources/AWS::ElasticLoadBalancingV2::TargetGroup/default/nginx1:80/status/targetGroupARN\"},\"targetType\":\"ip\",\"serviceRef\":{\"name\":\"nginx1\",\"port\":80},\"networking\":{\"ingress\":[{\"from\":[{\"ipBlock\":{\"cidr\":\"192.168.0.0/19\"}},{\"ipBlock\":{\"cidr\":\"192.168.32.0/19\"}}],\"ports\":[{\"protocol\":\"TCP\",\"port\":80}]}]},\"ipAddressType\":\"ipv4\"}}}}}}}"}

{"level":"info","ts":"2024-01-23T19:24:56Z","logger":"controllers.service","msg":"creating targetGroup","stackID":"default/nginx1","resourceID":"default/nginx1:80"}

{"level":"info","ts":"2024-01-23T19:24:56Z","logger":"controllers.service","msg":"created targetGroup","stackID":"default/nginx1","resourceID":"default/nginx1:80","arn":"arn:aws:elasticloadbalancing:us-east-1:87125367xxxx:targetgroup/k8s-default-nginx1-25a492f569/ad12b7f6eaf1ee7d"}

{"level":"info","ts":"2024-01-23T19:24:56Z","logger":"controllers.service","msg":"creating loadBalancer","stackID":"default/nginx1","resourceID":"LoadBalancer"}

{"level":"info","ts":"2024-01-23T19:24:57Z","logger":"controllers.service","msg":"created loadBalancer","stackID":"default/nginx1","resourceID":"LoadBalancer","arn":"arn:aws:elasticloadbalancing:us-east-1:87125367xxxx:loadbalancer/net/k8s-default-nginx1-36dbca87e3/7ff79bdbac47fb35"}

{"level":"info","ts":"2024-01-23T19:24:57Z","logger":"controllers.service","msg":"creating listener","stackID":"default/nginx1","resourceID":"80"}

{"level":"info","ts":"2024-01-23T19:24:57Z","logger":"controllers.service","msg":"created listener","stackID":"default/nginx1","resourceID":"80","arn":"arn:aws:elasticloadbalancing:us-east-1:87125367xxxx:listener/net/k8s-default-nginx1-36dbca87e3/7ff79bdbac47fb35/ead861bf3e4bcbb5"}

{"level":"info","ts":"2024-01-23T19:24:57Z","logger":"controllers.service","msg":"creating targetGroupBinding","stackID":"default/nginx1","resourceID":"default/nginx1:80"}

{"level":"info","ts":"2024-01-23T19:24:57Z","logger":"controllers.service","msg":"created targetGroupBinding","stackID":"default/nginx1","resourceID":"default/nginx1:80","targetGroupBinding":{"namespace":"default","name":"k8s-default-nginx1-25a492f569"}}

{"level":"info","ts":"2024-01-23T19:24:57Z","logger":"controllers.service","msg":"successfully deployed model","service":{"namespace":"default","name":"nginx1"}}

{"level":"info","ts":"2024-01-23T19:24:57Z","msg":"authorizing securityGroup ingress","securityGroupID":"sg-02cd536f30a36abed","permission":[{"FromPort":80,"IpProtocol":"tcp","IpRanges":[{"CidrIp":"192.168.0.0/19","Description":"elbv2.k8s.aws/targetGroupBinding=shared"}],"Ipv6Ranges":null,"PrefixListIds":null,"ToPort":80,"UserIdGroupPairs":null},{"FromPort":80,"IpProtocol":"tcp","IpRanges":[{"CidrIp":"192.168.32.0/19","Description":"elbv2.k8s.aws/targetGroupBinding=shared"}],"Ipv6Ranges":null,"PrefixListIds":null,"ToPort":80,"UserIdGroupPairs":null}]}

{"level":"info","ts":"2024-01-23T19:24:57Z","msg":"authorized securityGroup ingress","securityGroupID":"sg-02cd536f30a36abed"}

{"level":"info","ts":"2024-01-23T19:24:58Z","msg":"registering targets","arn":"arn:aws:elasticloadbalancing:us-east-1:87125367xxxx:targetgroup/k8s-default-nginx1-25a492f569/ad12b7f6eaf1ee7d","targets":[{"AvailabilityZone":null,"Id":"192.168.39.147","Port":80}]}
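Because each log line is a JSON object, the order of operations can be pulled out with a few lines of code. The sketch below embeds a handful of the shorter lines from the output above as sample input; the `summarize` helper is an illustrative name, not part of any tool.

```python
import json

# A few of the shorter log lines from the controller output above.
LOG_LINES = [
    '{"level":"info","ts":"2024-01-23T19:24:56Z","logger":"controllers.service","msg":"creating targetGroup","stackID":"default/nginx1","resourceID":"default/nginx1:80"}',
    '{"level":"info","ts":"2024-01-23T19:24:56Z","logger":"controllers.service","msg":"creating loadBalancer","stackID":"default/nginx1","resourceID":"LoadBalancer"}',
    '{"level":"info","ts":"2024-01-23T19:24:57Z","logger":"controllers.service","msg":"creating listener","stackID":"default/nginx1","resourceID":"80"}',
    '{"level":"info","ts":"2024-01-23T19:24:57Z","logger":"controllers.service","msg":"creating targetGroupBinding","stackID":"default/nginx1","resourceID":"default/nginx1:80"}',
    '{"level":"info","ts":"2024-01-23T19:24:57Z","logger":"controllers.service","msg":"successfully deployed model","service":{"namespace":"default","name":"nginx1"}}',
]


def summarize(lines):
    """Return (timestamp, message) pairs for every non-empty JSON log line."""
    events = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        events.append((record["ts"], record["msg"]))
    return events


for ts, msg in summarize(LOG_LINES):
    print(ts, msg)
```

The same function could be fed the lines of a saved `kubectl logs` output, provided every line in it is valid JSON.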

Instance Target

With instance targets, the Network Load Balancer (NLB) is configured to send traffic to one of the two worker nodes, which again have a NodePort configuration and listen on port 31729 in our example.

The flow works as follows:

The client resolves the FQDN of the Network Load Balancer. Note that NLBs are generally deployed across Availability Zones for redundancy. Once the FQDN is resolved, the client sends the packet to one of the two NLB public addresses.

The Internet Gateway at the edge of the VPC performs a static 1:1 NAT, changing the public address of the chosen NLB to the private address 192.168.41.233 (the left NLB).

The left NLB has two targets, the eth0 interfaces of Worker Nodes 1 and 2, both listening on TCP port 31729. In our example, we assume the traffic goes to Worker Node 1. Up to this point, the source is the client IP and the destination is Worker Node 1's eth0 address, 192.168.45.167.

Now that the packet has reached Worker Node 1, the behavior is the same as with NodePort: a DNAT translates the destination to the Pod address 192.168.22.71, and an SNAT changes the source from the client address to 192.168.45.167.

We can tune the NLB's behavior through annotations set on the service, specifically the setting:

service.beta.kubernetes.io/aws-load-balance..

    Name:                     nginx1
    Namespace:                default
    Labels:                   run=nginx1
    Annotations:              service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: instance
                              service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
                              service.beta.kubernetes.io/aws-load-balancer-type: external
    Selector:                 run=nginx1
    Type:                     LoadBalancer
    IP Family Policy:         SingleStack
    IP Families:              IPv4
    IP:                       10.100.69.226
    IPs:                      10.100.69.226
    LoadBalancer Ingress:     k8s-default-nginx1-36dbca87e3-7ff79bdbac47fb35.elb.us-east-1.amazonaws.com
    Port:                     <unset>  80/TCP
    TargetPort:               80/TCP
    NodePort:                 <unset>  31729/TCP
    Endpoints:                192.168.39.147:80
    Session Affinity:         None
    External Traffic Policy:  Cluster
    Events:                   <none>
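A Service carrying the three annotations shown above could be declared roughly as follows. This is a sketch rather than the manifest used in this post: `nlb_service_manifest` is a hypothetical helper, the annotation keys and values are copied from the output above, and the target type is a parameter because `ip` is the other value used later in this post.

```python
import json

ANNOTATION_PREFIX = "service.beta.kubernetes.io/"


def nlb_service_manifest(name, selector, port, target_port, target_type="instance"):
    """Build a LoadBalancer Service manifest as a plain dict (hypothetical helper)."""
    if target_type not in ("instance", "ip"):
        raise ValueError(f"unsupported target type: {target_type}")
    annotations = {
        ANNOTATION_PREFIX + "aws-load-balancer-type": "external",
        ANNOTATION_PREFIX + "aws-load-balancer-scheme": "internet-facing",
        ANNOTATION_PREFIX + "aws-load-balancer-nlb-target-type": target_type,
    }
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": name,
            "labels": dict(selector),
            "annotations": annotations,
        },
        "spec": {
            "type": "LoadBalancer",
            "selector": dict(selector),
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


manifest = nlb_service_manifest("nginx1", {"run": "nginx1"}, 80, 80)
print(json.dumps(manifest, indent=2))
```

Keeping the annotations in one helper makes the difference between the two modes a single argument.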

In the figures below, the AWS outputs show that the targets are the two worker node instances and that the configured NodePort is 31729.

The two images here show packet captures from both the Node and Pod perspectives: the Node sees the client's public IP address as the source, whereas the Pod sees the Node IPs (192.168.45.167 and 192.168.26.112) as the source.

IP Target

IP target mode is another way of configuring the NLB: here the NLB sends the packet directly to the Pod instead of to the Node. This is possible in our example because we are using the AWS VPC CNI, under which a Pod behaves like a VM in the sense that it gets an IP address from the VPC range and can therefore be reached directly from components within the VPC.

This mode is enabled by setting the annotation below.

service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip

    Name:                     nginx1
    Namespace:                default
    Labels:                   run=nginx1
    Annotations:              service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip
                              service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
                              service.beta.kubernetes.io/aws-load-balancer-type: external
    Selector:                 run=nginx1
    Type:                     LoadBalancer
    IP Family Policy:         SingleStack
    IP Families:              IPv4
    IP:                       10.100.69.226
    IPs:                      10.100.69.226
    LoadBalancer Ingress:     k8s-default-nginx1-36dbca87e3-7ff79bdbac47fb35.elb.us-east-1.amazonaws.com
    Port:                     <unset>  80/TCP
    TargetPort:               80/TCP
    NodePort:                 <unset>  31729/TCP
    Endpoints:                192.168.39.147:80
    Session Affinity:         None
    External Traffic Policy:  Cluster
    Events:                   <none>

As before, the AWS output shows the target, which is now the Pod address 192.168.39.147.

Again, we show the packet captures on both the Node and the Pod. Notice that even the Node sees the traffic as sourced from the load balancer and destined to the Pod IP, so there is no need for NodePort treatment at the worker nodes.
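IP targets work here because the Pod address is drawn from the VPC range, which a quick check can confirm. The sketch assumes that the two CIDR blocks appearing in the controller log (192.168.0.0/19 and 192.168.32.0/19) are subnets of the VPC; the `in_vpc_subnets` helper is an illustrative name.

```python
import ipaddress

# CIDR blocks from the controller log, assumed here to be VPC subnets.
VPC_SUBNETS = [
    ipaddress.ip_network("192.168.0.0/19"),
    ipaddress.ip_network("192.168.32.0/19"),
]


def in_vpc_subnets(ip, subnets=VPC_SUBNETS):
    """Return True if the address falls inside one of the given subnets."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in subnets)


print(in_vpc_subnets("192.168.39.147"))  # Pod address registered as the NLB target
print(in_vpc_subnets("10.100.69.226"))   # the service's ClusterIP
```

The Pod address falls inside the second block, whereas the ClusterIP falls in neither, which is consistent with the NLB targeting the Pod address rather than the service address.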

Drawbacks of Load Balancer Service

The main drawback of the LoadBalancer service is the 1:1 mapping between a load balancer and the service (group of Pods) that you want to expose. This has multiple implications, including increased cost, more management overhead, more public addresses, and the need to secure every load balancer with WAF, API security, and anti-DDoS solutions. If one of these services is left unprotected, the result could be a security breach leading to major losses.

The diagram below illustrates the problem: for each service, the AWS Load Balancer Controller instantiates an NLB.

The diagram below emphasizes the same challenge: we can see our Kubernetes cluster (depicted here as a single node) and all the different entry points that map to the different services.