Last week, a new tool called Kyverno captured my interest. I spent some time diving into its documentation to understand the use cases it addresses and the benefits it offers.

Kyverno is a policy engine designed specifically for Kubernetes. But what exactly is a policy engine? Simply put, it sets acceptable configuration standards for Kubernetes objects and resources. When a resource deviates from these standards, the engine can block the request, send alerts, or even mutate the configuration so that it complies with the policies. The main use cases for a policy engine like Kyverno revolve around enhancing security, ensuring compliance, automating tasks, and improving governance within Kubernetes clusters.

Basics of Admission Controllers

An admission controller is a component within Kubernetes that intercepts requests to the Kubernetes API server before the object is persisted, but after the request has been authenticated and authorized. As shown in the figure below, when a request to create a deployment is sent to the API server, it first undergoes authentication and authorization. Afterward, it is passed to the Admission Controller, depicted as a police officer in the diagram. In this scenario, the Admission Controller is configured to ensure that any deployment must have a minimum of two replicas to maintain high availability (HA).

Thus, the best way to think about an admission controller is as a police officer that examines requests sent to the API server to ensure they align with the organization's intent. It acts as a controller or watchdog, persistently ensuring conformance to organizational policies and intents.

Built-in Admission Controllers

It's worth noting that Kubernetes includes several built-in admission controllers. You can find a list of these controllers here.

Before delving further, it's important to differentiate between the two general types of admission controllers:

Validating Admission Controllers: These controllers simply allow or reject requests.

Mutating Admission Controllers: These controllers have the ability to modify objects related to the requests they receive.

Let's take the example of LimitRanger, a built-in admission controller (or plugin). It enforces LimitRange objects, which define policies that govern resource allocations for pods and other applicable resources within a namespace. More details can be found here. It's important to note that LimitRanger functions as both a validating and a mutating admission controller.

To try it out, the first step is to make sure it is in the list of enabled admission plugins, set with the "--enable-admission-plugins" flag of the API server. LimitRanger is normally enabled by default, so listing it explicitly mainly makes the setup visible.

```yaml
# Excerpt of the kube-apiserver static Pod manifest; remaining flags and fields omitted
apiVersion: v1
kind: Pod
metadata:
  annotations:
    kubeadm.kubernetes.io/kube-apiserver.advertise-address.endpoint: 172.18.0.3:6443
  creationTimestamp: null
  labels:
    component: kube-apiserver
    tier: control-plane
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-apiserver
    - --advertise-address=172.18.0.3
    - --allow-privileged=true
    - --authorization-mode=Node,RBAC
    - --client-ca-file=/etc/kubernetes/pki/ca.crt
    - --enable-admission-plugins=NodeRestriction,LimitRanger
```

Now, let's define the LimitRange object where we configure:

Minimum and maximum CPU for each container in the namespace where the LimitRange is created.

Default requests and limits applied when a user doesn't explicitly configure the resources for a container.

This configuration ensures that all containers in that namespace stay within the specified CPU range, promoting efficient resource utilization. Additionally, it provides a safety net by automatically assigning defaults when users do not specify resource constraints for their pods.

```yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: cpu-resource-constraint
spec:
  limits:
  - default: # this section defines default limits
      cpu: 500m
    defaultRequest: # this section defines default requests
      cpu: 500m
    max: # max and min define the limit range
      cpu: 600m
    min:
      cpu: 100m
    type: Container
```

Below is an example of a Pod that requests 700m of CPU without setting a limit. The default limit of 500m is applied to it, so the request ends up higher than the limit. When attempting to apply this configuration, you'll immediately encounter an error, showcasing the validating behavior of the admission controller.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: example-conflict-with-limitrange-cpu
spec:
  containers:
  - name: demo
    image: registry.k8s.io/pause:2.0
    resources:
      requests:
        cpu: 700m
```

The Pod "example-conflict-with-limitrange-cpu" is invalid: spec.containers[0].resources.requests: Invalid value: "700m": must be less than or equal to cpu limit of 500m
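
For contrast, here is a minimal sketch of a Pod that I would expect to be admitted under the same LimitRange, since its CPU request and limit both sit between the configured min (100m) and max (600m). The Pod name is hypothetical and the values are only illustrative.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: example-within-limitrange-cpu # hypothetical name
spec:
  containers:
  - name: demo
    image: registry.k8s.io/pause:2.0
    resources:
      requests:
        cpu: 200m # between min (100m) and max (600m)
      limits:
        cpu: 500m # not above max (600m), and not below the request
```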

Now, let's attempt to create another pod without specifying any resource requests or limits.

kubectl run nginx --image nginx

We can observe that the default requests and limits have been automatically applied to the pod, courtesy of the admission controller. This exemplifies a mutating behavior, wherein the original request has been modified to include the necessary resource requests and limits.

kubectl get pods nginx -o yaml

```yaml
spec:
  containers:
  - image: nginx
    imagePullPolicy: Always
    name: nginx
    resources:
      limits:
        cpu: 500m
      requests:
        cpu: 500m
```

External Admission Controllers

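In the previous section, we delved into the admission controllers built into Kubernetes. However, these built-in controllers often lack the configurability or flexibility needed to accommodate diverse organizational requirements. To fill that gap, Kubernetes can call out to external admission webhooks, and this is how Kyverno plugs into the API server.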

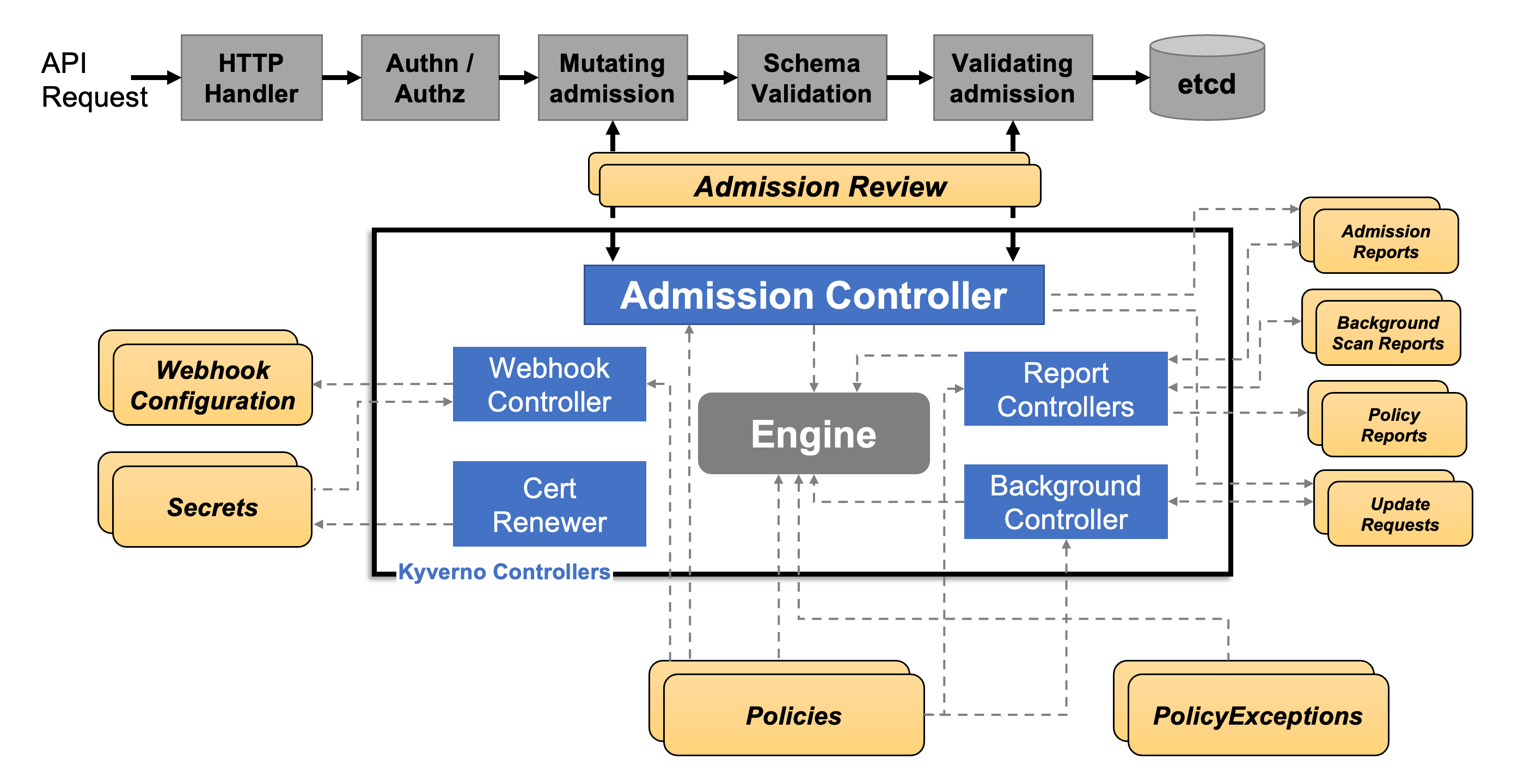

The diagram (Source: Kyverno documentation) illustrates the stages of an API request to a Kubernetes API Server, highlighting the crucial steps involving Mutating and Validating admissions.

Here's a concise overview of Kyverno's main components:

Webhook Controller: This controller is responsible for managing webhooks, which play a pivotal role in programming the Kubernetes API server to forward API requests to Kyverno. Webhooks are essential building blocks that enable seamless integration between Kubernetes and Kyverno, facilitating the enforcement of policies and configurations.

Policy Engine: Kyverno's policy engine serves as the cornerstone component tasked with evaluating and enforcing policies on Kubernetes resources. It empowers administrators to define policies as Kubernetes resources within Kyverno, enabling them to manage, validate, and mutate other Kubernetes resources effectively. By processing these policies, the policy engine ensures that the cluster complies with desired configurations and standards, fostering consistency and adherence to organizational policies.

Cert Renewer: This component focuses on managing TLS (Transport Layer Security) communications between the Kubernetes API Server and the Kyverno webhook service. By handling TLS certificates, the Cert Renewer ensures secure transmission of data between these components, safeguarding the integrity and confidentiality of communications.

The ValidatingWebhookConfiguration plays a crucial role in configuring the Kubernetes API server to route requests to Kyverno. Here's how it works:

Webhooks Section: This section defines where to redirect matching API requests. It typically references a Kubernetes Service and specifies a particular path to send the requests for validation.

Rules Section: The rules section allows you to specify the resources and operations that you want to send to the admission controller for validation. This granularity enables precise control over which requests are subject to validation.

Namespace Selector: This field enables you to focus admission control on specific namespaces where stricter governance is required or where you want to apply policies gradually. It provides flexibility in applying policies across different parts of the cluster.

Failure Policy: This field determines how the Kubernetes API server behaves when it cannot get a valid response from the webhook, for example because the call errors out or times out. The options are "Fail" and "Ignore". With "Fail", the request is rejected whenever the webhook cannot be reached, which guarantees that nothing bypasses the policies. With "Ignore", the request is allowed to proceed when the webhook is unavailable. Note that an explicit denial returned by the webhook always rejects the request, whichever option is set. To identify and understand gaps before fully enforcing policies, use Kyverno's Audit mode instead.

```yaml
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  name: kyverno-validating-webhook
webhooks:
- name: validate.kyverno.svc
  clientConfig:
    service:
      name: kyverno-svc
      namespace: kyverno
      path: "/validate"
    caBundle: <ca-bundle> # Base64 encoded CA certificate
  rules:
  - operations: ["CREATE", "UPDATE"]
    apiGroups: ["*"]
    apiVersions: ["*"]
    resources: ["*"]
  failurePolicy: Fail
  matchPolicy: Equivalent
  namespaceSelector: {}
  objectSelector: {}
  sideEffects: None
  timeoutSeconds: 10
  admissionReviewVersions: ["v1"]
```
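
To illustrate the namespace selector described above, the fragment below is a rough sketch of a selector that keeps the webhook away from kube-system. It relies on the `kubernetes.io/metadata.name` label that recent Kubernetes versions set on every namespace, and the choice of kube-system is only an example. Kyverno normally manages its webhook configurations itself, so treat this as an illustration of the field rather than something to edit by hand.

```yaml
# Fragment of a single webhook entry; it would replace "namespaceSelector: {}".
namespaceSelector:
  matchExpressions:
  - key: kubernetes.io/metadata.name # label carrying the namespace's own name
    operator: NotIn
    values: ["kube-system"]          # example: skip requests for this namespace
```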

Sample Policies

This example policy ensures that every Pod carries the "app" label.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: check-label-app
spec:
  validationFailureAction: Audit
  background: false
  validationFailureActionOverrides:
  - action: Enforce # Action to apply
    namespaces: # List of affected namespaces
    - default
  rules:
  - name: check-label-app
    match:
      any:
      - resources:
          kinds:
          - Pod
    validate:
      message: "The label `app` is required."
      pattern:
        metadata:
          labels:
            app: "?*"
```

Explanation:

ClusterPolicy: As the name suggests, this is a policy that applies cluster-wide, as opposed to a Policy, which is scoped to a single namespace.

background: When set to true, the policy is also evaluated against existing resources during background scans, and the results show up in policy reports. This covers resources that were created before the policy was put in place. In this example it is set to false, so only incoming admission requests are checked.

validationFailureAction: This field determines the default action to take when a validation rule fails. In this case, it is set to "Audit", meaning that failed validations will be recorded, but resource creation will continue.

validationFailureActionOverrides: This section allows you to override the default validation failure action for specific namespaces. In the example, validation failures in the default namespace will be enforced, overriding the default action set in validationFailureAction.

rules: This section defines the rules of the policy. In this example, there is one rule named "check-label-app" that applies to Pods. The rule specifies that Pods must have an 'app' label with a non-empty value.

Attempting to create an nginx pod within the blue namespace succeeds with a warning as per the policy.

```
kubectl -n blue run nginx --image=nginx
Warning: policy check-label-app.check-label-app: validation error: The label `app` is required. rule check-label-app failed at path /metadata/labels/app/
pod/nginx created
```

Attempting the same operation within the default namespace is denied, because the override enforces the policy there.

```
kubectl run nginx --image=nginx
Error from server: admission webhook "validate.kyverno.svc-fail" denied the request:
resource Pod/default/nginx was blocked due to the following policies
check-label-app:
  check-label-app: 'validation error: The label `app` is required. rule check-label-app
    failed at path /metadata/labels/app/'
```
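
As a counterpart to the denied request, a Pod along the following lines should satisfy the check-label-app rule in the default namespace. This is a minimal sketch: the label value is arbitrary, since the `?*` pattern only asks for at least one character.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  namespace: default
  labels:
    app: nginx # any non-empty value matches the "?*" pattern
spec:
  containers:
  - name: nginx
    image: nginx
```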

Here's the example policy enforcing a minimum level of high availability for deployments:

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: validate
spec:
  validationFailureAction: Enforce
  rules:
  - name: validate-replica-count
    match:
      any:
      - resources:
          kinds:
          - Deployment
    validate:
      message: "Replica count for a Deployment must be greater than or equal to 2."
      pattern:
        spec:
          replicas: ">=2"
```
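
To make the effect of the replica rule concrete, here is a sketch of a Deployment that should pass it, looking at this policy in isolation. The name `web`, its labels, and the image are hypothetical placeholders.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web # hypothetical name
spec:
  replicas: 2 # satisfies the ">=2" pattern; a value of 1 would be rejected
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: web
        image: nginx
```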

Cilium Policies

After reviewing several examples, I considered using Kyverno for admission control of Cilium Network Policies. To that end, I found an interesting repository here that helped me get started.

After making small modifications to various examples on GitHub, I crafted a policy that whitelists the namespaces where Cilium policies can be configured. Any attempt to create a Cilium policy in a namespace that is not on the whitelist is denied. I think this is pretty useful to prevent mishaps during Network Policy configuration, and it helps with locking things down one namespace at a time.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: limit-ciliumnetworkpolicy-scope
spec:
  validationFailureAction: enforce
  background: false
  rules:
  - name: limit-scope
    match:
      any:
      - resources:
          kinds:
          - CiliumNetworkPolicy
    validate:
      message: "CiliumNetworkPolicy can only be applied to specific namespaces"
      anyPattern:
      - metadata:
          namespace: blue
      - metadata:
          namespace: default
```

I then try to apply the following Network Policy in the green namespace, which is not on the whitelist.

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "isolate-ns1"
  namespace: green
spec:
  endpointSelector:
    matchLabels:
      {}
  ingress:
  - fromEndpoints:
    - matchLabels:
        {}
```

As expected, the request is blocked by the admission controller.

```
kubectl apply -f mypolicy.yaml
Error from server: error when creating "mypolicy.yaml": admission webhook "validate.kyverno.svc-fail" denied the request:
resource CiliumNetworkPolicy/green/isolate-ns1 was blocked due to the following policies
limit-ciliumnetworkpolicy-scope:
  limit-scope: 'validation error: CiliumNetworkPolicy can only be applied to specific
    namespaces. rule limit-scope[0] failed at path /metadata/namespace/ rule limit-scope[1]
    failed at path /metadata/namespace/'
```
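
For comparison, the same Network Policy placed in one of the whitelisted namespaces should get through, because one of the `anyPattern` entries then matches. A minimal sketch, assuming the blue namespace exists:

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "isolate-ns1"
  namespace: blue # on the whitelist, so the first anyPattern entry matches
spec:
  endpointSelector:
    matchLabels: {}
  ingress:
  - fromEndpoints:
    - matchLabels: {}
```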

The example below gives more insight into Kyverno's capabilities. It uses a ClusterPolicy that requires every Pod to carry the label "app.kubernetes.io/name".

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: require-labels
  annotations:
    policies.kyverno.io/title: Require Labels
    policies.kyverno.io/category: Best Practices
    policies.kyverno.io/minversion: 1.6.0
    policies.kyverno.io/severity: medium
    policies.kyverno.io/subject: Pod, Label
    policies.kyverno.io/description: >-
      Define and use labels that identify semantic attributes of your application or Deployment.
      A common set of labels allows tools to work collaboratively, describing objects in a common manner that
      all tools can understand. The recommended labels describe applications in a way that can be
      queried. This policy validates that the label `app.kubernetes.io/name` is specified with some value.
spec:
  validationFailureAction: Enforce
  background: true
  rules:
  - name: check-for-labels
    match:
      any:
      - resources:
          kinds:
          - Pod
    validate:
      message: "The label `app.kubernetes.io/name` is required."
      pattern:
        metadata:
          labels:
            app.kubernetes.io/name: "?*"
```

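Now, I try to create a Deployment (not a Pod) and, interestingly enough, the admission controller reacts correctly and blocks it. This works because Kyverno automatically generates equivalent rules for Pod controllers such as Deployments, which is why the failing rule in the output is named autogen-check-for-labels and the path points at the Pod template.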

```
kubectl create deployment kubernetes-bootcamp --image=gcr.io/google-samples/kubernetes-bootcamp:v1
error: failed to create deployment: admission webhook "validate.kyverno.svc-fail" denied the request:
resource Deployment/default/kubernetes-bootcamp was blocked due to the following policies
require-labels:
  autogen-check-for-labels: 'validation error: The label `app.kubernetes.io/name`
    is required. rule autogen-check-for-labels failed at path /spec/template/metadata/labels/app.kubernetes.io/name/'
```
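
The path in the error points at the Pod template, so that is where the label has to go. Below is a sketch of a Deployment manifest that should satisfy require-labels, considering this policy on its own (other policies from this post, such as check-label-app, may still object). The label value and the replica count are illustrative choices.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kubernetes-bootcamp
spec:
  replicas: 2
  selector:
    matchLabels:
      app.kubernetes.io/name: kubernetes-bootcamp
  template:
    metadata:
      labels:
        # This is the label checked at /spec/template/metadata/labels/
        app.kubernetes.io/name: kubernetes-bootcamp
    spec:
      containers:
      - name: kubernetes-bootcamp
        image: gcr.io/google-samples/kubernetes-bootcamp:v1
```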

Conclusion

Kyverno, and admission controllers more generally, can help ensure better security, compliance and governance of Kubernetes clusters. On top of this, a good number of ready-made policies are available, which makes Kyverno easier to adopt. I even tried asking ChatGPT to generate a Kyverno policy:

please craft a kyverno policy that would allow cilium network policies to only touch pods that have environment: dev as not to disrupt production

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: restrict-cilium-policy
spec:
  background: true
  validationFailureAction: enforce
  rules:
  - name: restrict-cilium-network-policy
    match:
      resources:
        kinds:
        - CiliumNetworkPolicy
    validate:
      message: "Cilium Network Policies can only target pods with the label 'environment: dev'."
      pattern:
        spec:
          endpointSelector:
            matchLabels:
              environment: dev
```

If you are just getting started, it might be worthwhile to deploy policies with validationFailureAction set to Audit, as this shows how the objects being created deviate from the norm without blocking them. Setting background to true ensures that your existing resources are also scanned for adherence to organizational policies. validationFailureActionOverrides is then useful to enforce policies one namespace at a time and ensure a smooth adoption.
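
Put together, that rollout approach could look roughly like the fragment below. It only reuses fields already shown in this post. The blue namespace is just an example of a first namespace to enforce, and the rules are left out.

```yaml
# Fragment of a ClusterPolicy spec sketching a gradual rollout; rules omitted.
spec:
  validationFailureAction: Audit # report violations without blocking
  background: true               # also scan resources that already exist
  validationFailureActionOverrides:
  - action: Enforce              # block violations only in the listed namespaces
    namespaces:
    - blue                       # example namespace; extend the list over time
```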

Finally, Policy Reports are worth mentioning: they provide insights into the compliance status of resources in the Kubernetes cluster. Though I have noticed that some resources don't show up in the reports, they are still very useful when background is set to true, as this gives an indication of the compliance state of all resources within Kubernetes.

kubectl get policyreports.wgpolicyk8s.io -A

```
NAMESPACE NAME KIND NAME PASS FAIL WARN ERROR SKIP AGE
cilium-monitoring 6228a955-195b-4795-83ee-5c1a662bf532 Deployment prometheus 0 1 0 0 0 8d
cilium-monitoring 81242176-f6ac-4331-8be3-bdedccc32f67 Pod grafana-6f4755f98c-4cxzx 0 1 0 0 0 8d
cilium-monitoring bbe6c1f9-a91f-4628-b524-bf04c2603c0a ReplicaSet grafana-6f4755f98c 0 1 0 0 0 8d
cilium-monitoring c70e65cc-800a-4986-90ab-c52078189f29 ReplicaSet prometheus-67fdcf4796 0 1 0 0 0 8d
cilium-monitoring d7006776-21e2-4b86-a684-d6cf173f2f2e Pod prometheus-67fdcf4796-gj4kb 0 1 0 0 0 8d
cilium-monitoring dd37f6a5-0bd7-43f8-a7d5-337f089df95f Deployment grafana 0 1 0 0 0 8d
kube-system 0064fbf9-80ce-4517-934e-5b34d98e88c5 DaemonSet cilium 0 1 0 0 0 8d
kube-system 0be0cbb5-25b0-448e-922c-074e510d269c Pod cilium-zx692 0 1 0 0 0 8d
kube-system 0d9c47b0-2b55-4752-ae1f-1d22ab63e9c9 Pod cilium-operator-5d64788c99-g2f48 0 1 0 0 0 8d
kube-system 14ae51f5-ba2f-4942-aa35-d93e94d2d76f Pod kube-scheduler-kind-control-plane 0 1 0 0 0 8d
kube-system 23a370a8-ef0e-4d1c-aa19-ffab703b5f0d DaemonSet kube-proxy 0 1 0 0 0 8d
kube-system 35e9706f-7a88-4932-a78c-37745a397abe Pod coredns-76f75df574-xvxns 0 1 0 0 0 8d
kube-system 501429e0-4f00-4f30-9cc8-9eb633d9a0e5 ReplicaSet coredns-76f75df574 0 1 0 0 0 8d
kube-system 6911c7d8-4128-470d-89ef-6fc969e62b05 Pod kube-controller-manager-kind-control-plane 0 1 0 0 0 8d
kube-system 69a7f09e-a753-4dd9-8fe2-e5eb70e3691d Pod kube-proxy-jvllh 0 1 0 0 0 8d
kube-system 8c6c30a5-1ef6-4bd1-9978-381b0aeca611 Deployment cilium-operator 0 1 0 0 0 8d
kube-system 9cd0150a-34fd-49df-837b-d2f19ea575dd ReplicaSet cilium-operator-5d64788c99 0 1 0 0 0 8d
kube-system 9ecef8f0-c9b1-4989-9fa6-15b07f8de581 Deployment coredns 0 1 0 0 0 8d
kube-system a8482fb9-10bc-4819-8230-17a776d1079f Pod cilium-kfrnt 0 1 0 0 0 8d
kube-system be23c6c2-8ad5-4a86-adc1-de4180dc8d81 Pod kube-proxy-g4gnt 0 1 0 0 0 8d
kube-system cc4021e0-2360-403b-b013-fad70807f04b Pod coredns-76f75df574-7zdjj 0 1 0 0 0 8d
kube-system dba5f38a-e8e5-46d0-9e4a-ec269ae9ddb4 Pod cilium-bztgh 0 1 0 0 0 8d
kube-system e0146bde-f48c-4663-997f-019e24402bcd Pod cilium-operator-5d64788c99-hdlsz 0 1 0 0 0 8d
kube-system eadc9e7d-90dd-42c1-9dbe-62b3822d668a Pod kube-proxy-fn567 0 1 0 0 0 8d
kube-system f3d97e60-2a0f-4985-9644-a98e98541103 Pod etcd-kind-control-plane 0 1 0 0 0 8d
kube-system ff45c31d-ca5d-43c5-90da-bb0f113b03b3 Pod kube-proxy-6jfqw 0 1 0 0 0 8d
kyverno 0840b270-f6a2-499c-b1d7-a37a716ef440 Pod kyverno-background-controller-84b5ff7875-fkd2x 0 1 0 0 0 8d
kyverno 13f74dc4-4e27-4a78-b34c-cc9ae3e7b57c CronJob kyverno-cleanup-admission-reports 0 1 0 0 0 8d
kyverno 27bf13f4-2bc8-4dae-8e0c-2a29d57438c0 Deployment kyverno-reports-controller 0 1 0 0 0 8d
kyverno 29bcbffc-d253-4d8e-97d6-98043bf9600b CronJob kyverno-cleanup-cluster-admission-reports 0 1 0 0 0 8d
kyverno 532c51d8-843e-42ae-82d2-d5a8f6f9b62e Pod kyverno-cleanup-controller-574fc8c6b6-kpbbg 0 1 0 0 0 8d
kyverno 6fea3745-3b96-46c9-9c62-59cc22f75722 Deployment kyverno-admission-controller 0 1 0 0 0 8d
kyverno 80e5c9d7-9677-4a71-a4c3-89370afd665e ReplicaSet kyverno-cleanup-controller-574fc8c6b6 0 1 0 0 0 8d
kyverno 94048b9d-8a52-4f70-bbc4-cb814b8ee671 Pod kyverno-reports-controller-5557c85bb4-8jpm6 0 1 0 0 0 8d
kyverno a0948eaa-9332-488c-a7a9-cb9c3ee4b045 ReplicaSet kyverno-admission-controller-6797d4bd6b 0 1 0 0 0 8d
kyverno ab1c5601-072a-4782-b08a-d9c2e9ae3d92 Deployment kyverno-cleanup-controller 0 1 0 0 0 8d
kyverno ead76fa0-bfec-4385-9a00-4b26293c069b Pod kyverno-admission-controller-6797d4bd6b-l2kwf 0 1 0 0 0 8d
kyverno f47b4093-46fd-4eb7-abe3-0e431a98d23a ReplicaSet kyverno-background-controller-84b5ff7875 0 1 0 0 0 8d
local-path-storage 13e4514a-18e1-484d-aa39-c591f34c4d56 Pod local-path-provisioner-7577fdbbfb-6hb2v 0 1 0 0 0 8d
local-path-storage 45119b4b-a214-4d6c-b06a-7c8a2c4af402 ReplicaSet local-path-provisioner-7577fdbbfb 0 1 0 0 0 8d
local-path-storage adc91343-2e69-4059-aebb-1e4a50f80a2b Deployment local-path-provisioner 0 1 0 0 0 8d
```