Brief Introduction

Kubernetes Services serve as an abstraction layer for one or more pods running behind them. If you're thinking of load balancers or proxies, you are on the right track.

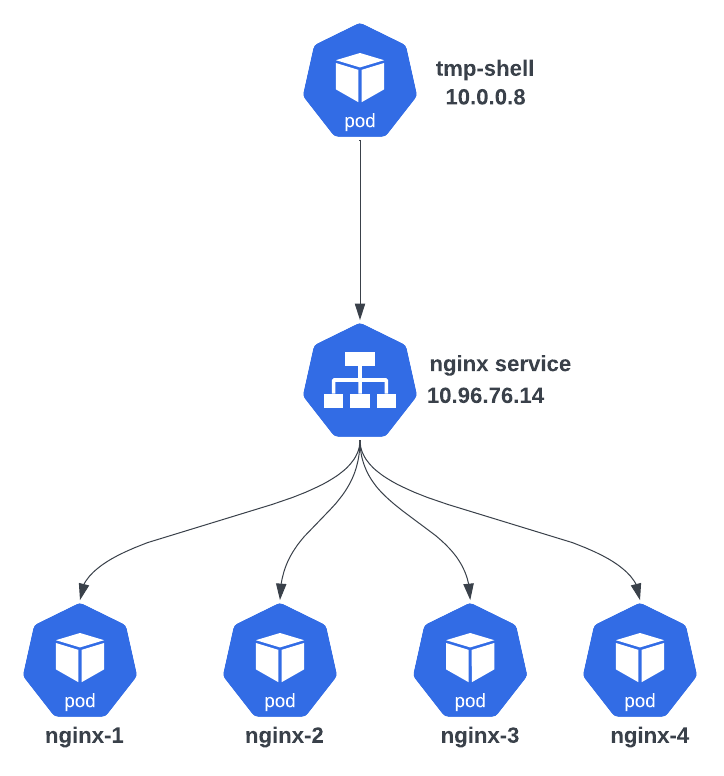

In essence, pods are transient resources in Kubernetes, typically created through Deployments. A Deployment relies on controllers to keep the desired number of pod replicas running at all times. In the figure above, a Pod named "tmp-shell" needs to communicate with an nginx pod. "tmp-shell" doesn't care which specific nginx pod it reaches, and it is unaffected when the set of pods behind the nginx service changes. Kubernetes Services provide exactly this abstraction: the nginx service hides the details of individual nginx pods from "tmp-shell". "tmp-shell" connects to the nginx service, and the service then directs the request to one of its available endpoints (targets).

One lingering question is: how does "tmp-shell" discover the service? The service has both an IP address and a DNS name, so "tmp-shell" sends a DNS query to CoreDNS, the Kubernetes DNS service, to resolve the name of the nginx service. Once CoreDNS responds, "tmp-shell" can connect to the nginx service using the returned IP address.

Kubernetes has several Service types: some expose Pods only inside the Kubernetes cluster, while others expose them outside the cluster. In this blog post, we focus mainly on the ClusterIP Service type, which exposes Pods within the cluster, as in the tmp-shell and nginx example above, where all the Pods reside in the same Kubernetes cluster.

Where do Services Live?

Services are implemented through iptables rules on each node (this post covers kube-proxy's iptables mode). Whenever a service is created or updated, these iptables rules are adjusted so that traffic is directed to the designated pods.

ClusterIP services are instantiated on all nodes, meaning their iptables rules are programmed across the entire cluster. Think of it as an anycast address that is present on every node in the cluster. In the figure below, the nginx service is backed by three pods: nginx-1, nginx-2 and nginx-3. Every node has an instance of the service, depicted as iptables configuration, and the service shares a common virtual address, 10.96.76.14 in our case.

When Client-1 on Worker Node-1 sends a request to the nginx service, the service must decide which of the three backing pods receives it. With three pods, each one receives roughly a third of new connections. Importantly, the service is instantiated even on nodes that run no nginx pods, as with Worker Node 3.
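The idea that every node carries the same service entry, listing all endpoints in the cluster, can be sketched as a toy model. The node names, pod placement and pod IPs below are hypothetical; only the service address 10.96.76.14 comes from this post. The sketch models "pick one backend uniformly at random per new connection", not the actual iptables mechanics.

```python
import random
from collections import Counter
from typing import Dict, List

# Hypothetical layout: node names, pod placement, and pod IPs are illustrative.
NODES: Dict[str, List[str]] = {
    "worker-1": ["10.0.0.11"],               # nginx-1
    "worker-2": ["10.0.1.12", "10.0.1.13"],  # nginx-2, nginx-3
    "worker-3": [],                          # no nginx pods on this node
}
SERVICE_VIP = "10.96.76.14"

# Every node gets the same entry for the service, listing the endpoints of the
# whole cluster rather than only the pods running locally.
CLUSTER_ENDPOINTS = [ip for pods in NODES.values() for ip in pods]
SERVICE_TABLE = {node: {SERVICE_VIP: list(CLUSTER_ENDPOINTS)} for node in NODES}


def pick_backend(node: str, vip: str, rng: random.Random) -> str:
    """Pick a backend, uniformly at random, for a new connection from `node`."""
    return rng.choice(SERVICE_TABLE[node][vip])


rng = random.Random(42)
trials = 30_000
counts = Counter(pick_backend("worker-3", SERVICE_VIP, rng) for _ in range(trials))
for ip, hits in sorted(counts.items()):
    print(f"{ip}: {hits / trials:.1%}")  # each roughly a third
```

Even though worker-3 hosts no nginx pod, clients on it still reach all three backends in roughly equal proportions.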

Traffic Flow

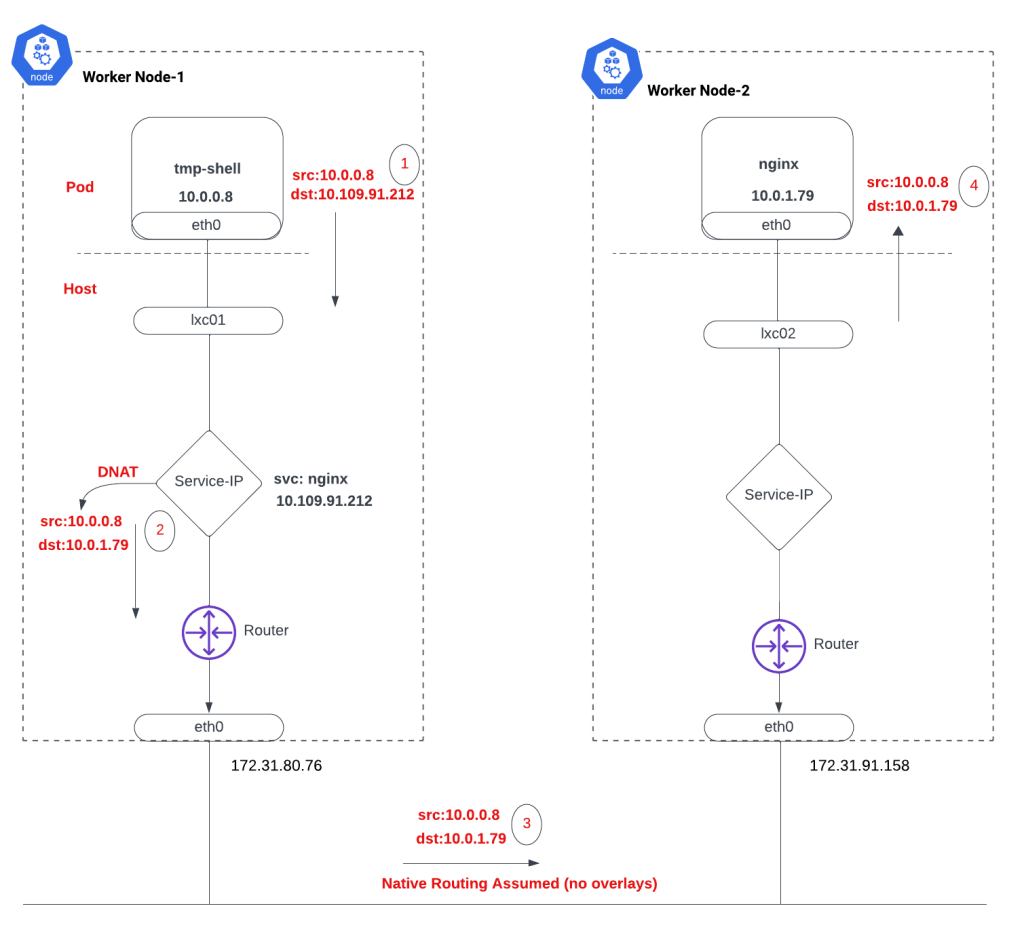

In this section, we delve into the traffic flow between the "tmp-shell" pod and the nginx pods, highlighting key points:

Virtual Ethernet Pairs: "tmp-shell" connects to the host through a virtual Ethernet (veth) pair: one end appears as eth0 inside the pod, and the other end is a host interface, named lxc01 for "tmp-shell". The same pattern applies to every pod in the cluster. You can think of it as a virtual wire connecting the Pod to the host.

Service Name Resolution: To communicate with the nginx service, "tmp-shell" needs to resolve the service name to its IP address. Assuming the default cluster domain, the naming convention for services is

<servicename>.<namespace>.svc.cluster.local

In our example, which uses the default namespace, the nginx service's FQDN is "nginx.default.svc.cluster.local". Once it receives the DNS response, "tmp-shell" (10.0.0.8) sends a packet to the nginx service address (10.96.76.14).

Destination NAT (DNAT): iptables performs Destination NAT, rewriting the destination from the nginx service address (10.96.76.14) to the nginx pod address. The example shows a single endpoint (backing pod) for the nginx service, though real-world scenarios usually involve multiple pods.
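The naming convention from the Service Name Resolution step can be sketched as a small helper. The `SERVICE_RECORDS` dictionary is a hypothetical stand-in for CoreDNS; a real pod simply sends a DNS query through its resolver. The helper names are made up for illustration.

```python
# Hypothetical stand-in for CoreDNS: a static map from Service FQDN to ClusterIP.
SERVICE_RECORDS = {
    "nginx.default.svc.cluster.local": "10.96.76.14",
}


def service_fqdn(service: str, namespace: str = "default",
                 cluster_domain: str = "cluster.local") -> str:
    """Build <servicename>.<namespace>.svc.<cluster domain>."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def resolve(service: str, namespace: str = "default") -> str:
    """Look up the ClusterIP for a Service; raises KeyError if it is unknown."""
    return SERVICE_RECORDS[service_fqdn(service, namespace)]


print(service_fqdn("nginx"))  # nginx.default.svc.cluster.local
print(resolve("nginx"))       # 10.96.76.14
```

Inside a pod, the short name "nginx" usually works too, because the pod's resolver configuration includes search domains such as default.svc.cluster.local.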

Pod-to-Pod Routing Patterns: There are two high-level patterns for pod-to-pod routing across nodes:

Native Routing: Pod addresses traverse the network between nodes unchanged. In this scenario, the underlying network must know how to route Pod addresses. In return, because packets carry the real pod addresses on the wire, without NAT or encapsulation, you get better visibility into pod-to-pod communication.

Overlays: Regardless of the tunneling mechanism (VXLAN and Geneve are common choices), packets leave the node wrapped in an outer header whose source and destination are the worker nodes. The underlying network sees these packets as travelling from one worker node to another. Once the receiving node strips the outer header, the nginx pod receives the packet with "tmp-shell" (10.0.0.8) as the source address.
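The overlay pattern can be sketched by modelling only the addressing: an inner pod-to-pod packet wrapped in an outer node-to-node header. The per-node /24 pod CIDRs and the 192.168.1.x node IPs are hypothetical, and real tunnels (VXLAN, Geneve) add UDP and tunnel headers that this sketch leaves out. The inner destination is already the nginx pod because DNAT happens on the sending node before encapsulation.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    payload: object  # application bytes, or the inner Packet once encapsulated


# Hypothetical: one /24 pod CIDR per node and made-up underlay node IPs.
POD_CIDR_TO_NODE = {
    ipaddress.ip_network("10.0.0.0/24"): "192.168.1.11",  # Worker Node 1
    ipaddress.ip_network("10.0.1.0/24"): "192.168.1.12",  # Worker Node 2
}


def node_for(pod_ip: str) -> str:
    """Return the underlay IP of the node that hosts `pod_ip`."""
    addr = ipaddress.ip_address(pod_ip)
    for cidr, node_ip in POD_CIDR_TO_NODE.items():
        if addr in cidr:
            return node_ip
    raise LookupError(f"no node owns {pod_ip}")


def encapsulate(inner: Packet) -> Packet:
    """Wrap a pod-to-pod packet in an outer node-to-node header."""
    return Packet(src=node_for(inner.src), dst=node_for(inner.dst), payload=inner)


def decapsulate(outer: Packet) -> Packet:
    """Strip the outer header on the receiving node, exposing the original packet."""
    if not isinstance(outer.payload, Packet):
        raise TypeError("payload is not an encapsulated packet")
    return outer.payload


inner = Packet(src="10.0.0.8", dst="10.0.1.79", payload=b"GET / HTTP/1.1")
outer = encapsulate(inner)
print(outer.src, "->", outer.dst)  # 192.168.1.11 -> 192.168.1.12
print(decapsulate(outer).src)      # 10.0.0.8
```

The underlay only ever sees the node addresses, while the nginx pod sees "tmp-shell" as the source after decapsulation.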

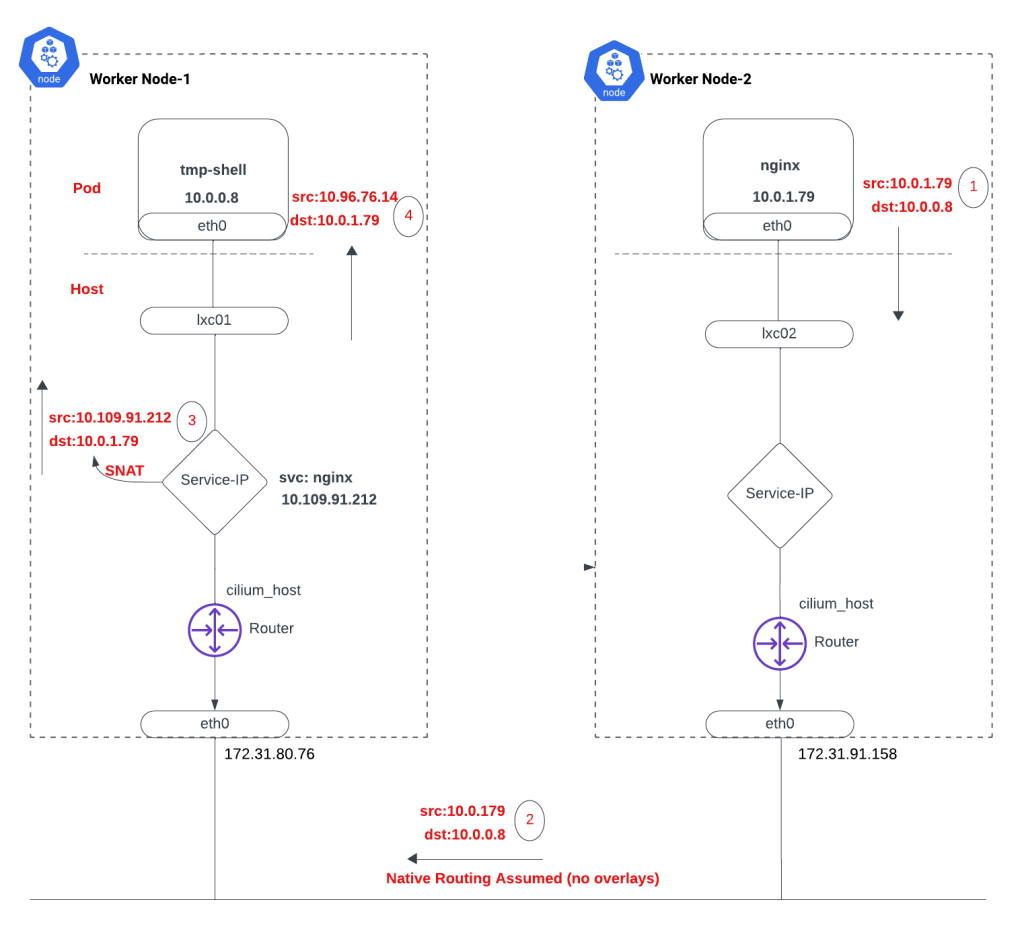

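Response Handling: When the reply arrives back on Worker Node 1, its source is 10.0.1.79 and its destination is 10.0.0.8. However, "tmp-shell" would not accept this packet as a reply, because the original packet it sent went from itself (10.0.0.8) to the nginx service (10.96.76.14). iptables therefore intervenes again: connection tracking (conntrack), which recorded the original DNAT, reverses it and rewrites the reply's source from 10.0.1.79 (the nginx pod) to 10.96.76.14 (the nginx service). The effect is a source rewrite on the reply, so it matches the original packet flow, although no separate SNAT rule is involved.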
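The DNAT on the way out and its automatic reversal on the way back can be sketched as a toy NAT table with a conntrack-like map. `ToyNat` and the client source port 40000 are hypothetical; the addresses come from this post. Real conntrack tracks far more state (protocol, timeouts, connection state).

```python
from __future__ import annotations

from dataclasses import dataclass, replace
from typing import Dict, Tuple

Addr = Tuple[str, int]


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    sport: int
    dport: int


class ToyNat:
    """Toy model of a node's DNAT plus conntrack's automatic reverse translation."""

    def __init__(self, services: Dict[Addr, Addr]) -> None:
        # (ClusterIP, port) -> (pod IP, pod port); one backend per service for brevity.
        self.services = services
        # Expected reply tuple -> original service address to restore on replies.
        self.conntrack: Dict[Tuple[str, int, str, int], Addr] = {}

    def outbound(self, pkt: Packet) -> Packet:
        """DNAT a new connection aimed at a service address."""
        backend = self.services.get((pkt.dst, pkt.dport))
        if backend is None:
            return pkt  # not a service address: leave untouched
        pod_ip, pod_port = backend
        self.conntrack[(pod_ip, pod_port, pkt.src, pkt.sport)] = (pkt.dst, pkt.dport)
        return replace(pkt, dst=pod_ip, dport=pod_port)

    def inbound(self, pkt: Packet) -> Packet:
        """Rewrite a reply's source back to the service address it was sent to."""
        original = self.conntrack.get((pkt.src, pkt.sport, pkt.dst, pkt.dport))
        if original is None:
            return pkt
        vip, vport = original
        return replace(pkt, src=vip, sport=vport)


nat = ToyNat({("10.96.76.14", 80): ("10.0.1.79", 80)})
print(nat.outbound(Packet("10.0.0.8", "10.96.76.14", 40000, 80)))
# Packet(src='10.0.0.8', dst='10.0.1.79', sport=40000, dport=80)
print(nat.inbound(Packet("10.0.1.79", "10.0.0.8", 80, 40000)))
# Packet(src='10.96.76.14', dst='10.0.0.8', sport=80, dport=40000)
```

Keying the map on the expected reply tuple is what lets the reverse translation happen without any explicit SNAT rule.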

This walkthrough traces each step of how "tmp-shell" communicates with the nginx pods across the Kubernetes cluster.

Here you can find the packet captures that match the explanation above.

The iptables rules operate as follows:

PREROUTING Chain:

- Starts the process by directing traffic to the KUBE-SERVICES chain.

KUBE-SERVICES Chain:

- Checks whether the destination matches the Cluster IP of the nginx service (10.109.91.212 in this capture; note that it differs from the 10.96.76.14 used in the figures).
- If it matches, sends the traffic to the KUBE-SVC-2CMXP7HKUVJN7L6M chain.

KUBE-SVC-2CMXP7HKUVJN7L6M Chain:

- Directs traffic to the KUBE-SEP-XZRG5L6J3WL7H422 chain, as indicated by the comment.

KUBE-SEP-XZRG5L6J3WL7H422 Chain:

- Uses DNAT (Destination Network Address Translation) in its final rule.
- This rule rewrites the destination of the traffic so that it reaches the nginx pod (10.0.1.79:80).

These iptables rules collectively ensure that traffic directed to the specified nginx service IP undergoes the necessary transformations, ultimately reaching the appropriate nginx pod.
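The chain walk described above can be sketched as a tiny interpreter over the single-endpoint nat-table excerpt shown in this post. The `Rule` structure and `traverse` function are hypothetical; predicates stand in for the `-d`, `-p` and `--dport` matches, and everything else iptables does (marks, other tables, policies) is ignored.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Pkt = Dict[str, object]


@dataclass
class Rule:
    match: Callable[[Pkt], bool]  # predicate standing in for -d/-p/--dport matches
    comment: str
    jump: Optional[str] = None    # -j <user chain>
    dnat: Optional[str] = None    # -j DNAT --to-destination <ip:port> (terminal)


def is_nginx_vip(p: Pkt) -> bool:
    return p["dst"] == "10.109.91.212" and p["proto"] == "tcp" and p["dport"] == 80


# Transcribed from the single-endpoint nat-table excerpt in this post.
CHAINS: Dict[str, List[Rule]] = {
    "PREROUTING": [
        Rule(lambda p: True, "kubernetes service portals", jump="KUBE-SERVICES"),
    ],
    "KUBE-SERVICES": [
        Rule(is_nginx_vip, "default/nginx cluster IP", jump="KUBE-SVC-2CMXP7HKUVJN7L6M"),
    ],
    "KUBE-SVC-2CMXP7HKUVJN7L6M": [
        Rule(lambda p: True, "default/nginx -> 10.0.1.79:80", jump="KUBE-SEP-XZRG5L6J3WL7H422"),
    ],
    "KUBE-SEP-XZRG5L6J3WL7H422": [
        Rule(lambda p: p["proto"] == "tcp", "default/nginx", dnat="10.0.1.79:80"),
    ],
}


def traverse(chain: str, pkt: Pkt, trace: List[str]) -> Optional[str]:
    """Walk a chain's rules in order and return the DNAT target, if any.

    A jump into a user chain that ends without a verdict returns to the
    calling chain, which then continues with its next rule.
    """
    for rule in CHAINS[chain]:
        if not rule.match(pkt):
            continue
        trace.append(f"{chain}: {rule.comment}")
        if rule.dnat is not None:
            return rule.dnat
        if rule.jump is not None:
            target = traverse(rule.jump, pkt, trace)
            if target is not None:
                return target
    return None


trace: List[str] = []
print(traverse("PREROUTING", {"dst": "10.109.91.212", "proto": "tcp", "dport": 80}, trace))
print("\n".join(trace))
```

A packet to the ClusterIP on port 80 ends at 10.0.1.79:80 after passing through all four chains, while a packet to any other address or port falls through untouched.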

Quick Note on iptables:

KUBE-SERVICES: the top-level chain that holds entries for all Kubernetes Services configured on the cluster.

KUBE-SVC-*: a chain for one particular service, e.g. the nginx service.

KUBE-SEP-*: a chain for one particular endpoint (SEP stands for service endpoint).

Thus, in our example, you can see one KUBE-SVC-* chain and one KUBE-SEP-* chain, because we have a single service (nginx) with a single endpoint (10.0.1.79).

```
-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
-A KUBE-SERVICES -d 10.109.91.212/32 -p tcp -m comment --comment "default/nginx cluster IP" -m tcp --dport 80 -j KUBE-SVC-2CMXP7HKUVJN7L6M
-A KUBE-SVC-2CMXP7HKUVJN7L6M -m comment --comment "default/nginx -> 10.0.1.79:80" -j KUBE-SEP-XZRG5L6J3WL7H422
-A KUBE-SEP-XZRG5L6J3WL7H422 -p tcp -m comment --comment "default/nginx" -m tcp -j DNAT --to-destination 10.0.1.79:80
```

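The iptables excerpt below shows what happens when we add a second endpoint behind the nginx service. There is still one KUBE-SVC-* chain (one service) but now two KUBE-SEP-* chains (two endpoints). The first rule sends traffic to its endpoint with a probability of 0.5 (50%); anything it does not pick falls through to the second rule, which matches unconditionally. The load is therefore split roughly equally across both endpoints. Because these rules live in the nat table, the choice is made once per new connection, and later packets of that connection stay on the same endpoint.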

Endpoint 1: 10.0.1.79

Endpoint 2: 10.0.0.224

```
-A KUBE-SVC-2CMXP7HKUVJN7L6M -m comment --comment "default/nginx -> 10.0.0.224:80" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-HZXSYA2C4E7MQSH3
-A KUBE-SVC-2CMXP7HKUVJN7L6M -m comment --comment "default/nginx -> 10.0.1.79:80" -j KUBE-SEP-XZRG5L6J3WL7H422
-A KUBE-SEP-XZRG5L6J3WL7H422 -p tcp -m comment --comment "default/nginx" -m tcp -j DNAT --to-destination 10.0.1.79:80
-A KUBE-SEP-HZXSYA2C4E7MQSH3 -p tcp -m comment --comment "default/nginx" -m tcp -j DNAT --to-destination 10.0.0.224:80
```
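The two-endpoint case generalizes to n endpoints by giving each rule a probability of 1/(number of endpoints not yet skipped), with the last rule unconditional; as we understand it, this is the scheme kube-proxy's iptables mode uses. The sketch below (helper names are hypothetical) computes those per-rule probabilities with exact fractions and checks that every endpoint ends up with the same overall share. It does not try to reproduce the exact digits iptables-save prints.

```python
from fractions import Fraction
from typing import List, Optional


def rule_probabilities(n: int) -> List[Optional[Fraction]]:
    """--probability for each of n endpoint rules; None means no statistic match."""
    if n < 1:
        raise ValueError("need at least one endpoint")
    # Rule i only sees traffic that rules 0..i-1 did not take, so it takes
    # 1/(endpoints remaining); the last rule catches everything left over.
    return [Fraction(1, n - i) for i in range(n - 1)] + [None]


def effective_share(n: int) -> List[Fraction]:
    """Fraction of new connections that ends up at each endpoint."""
    shares: List[Fraction] = []
    remaining = Fraction(1)
    for p in rule_probabilities(n):
        taken = remaining if p is None else remaining * p
        shares.append(taken)
        remaining -= taken
    return shares


for n in (2, 3, 4):
    probs = ["always" if p is None else f"{float(p):.4f}" for p in rule_probabilities(n)]
    print(n, probs, [str(s) for s in effective_share(n)])
```

For two endpoints this reproduces the 0.5 followed by an unconditional rule seen in the excerpt; for three it yields 1/3, 1/2, then unconditional, which still gives each endpoint one third.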

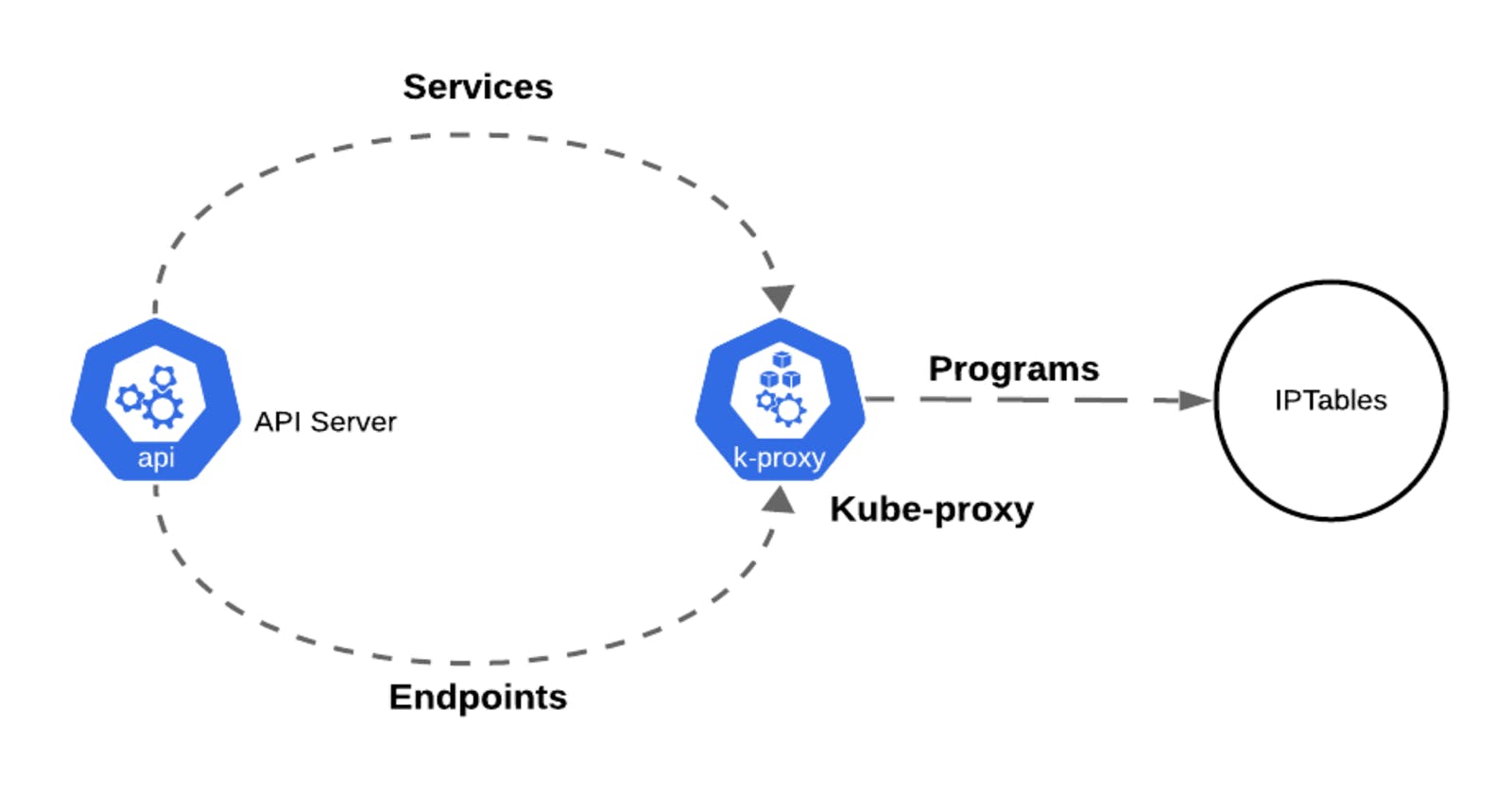

How Are These iptables Rules Programmed?

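kube-proxy, which runs on every node, watches the API server for Services and their endpoints (EndpointSlice objects in current Kubernetes versions). Whenever a Service or its endpoints change, kube-proxy is notified and reprograms the node's iptables rules accordingly.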
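The watch-and-reprogram loop can be sketched as a toy proxy that keeps the latest Service and endpoint objects and regenerates its rule list after every event. Everything here is hypothetical: `ToyProxy`, the event shape and the TOY-* chain names are made up, the SVC and SEP chains are flattened into one, and the sketch does not model what happens when a Service has no endpoints. The real kube-proxy also rate-limits syncs and applies rules in bulk with iptables-restore, none of which is shown.

```python
from typing import Dict, List, Optional, Tuple


class ToyProxy:
    """Hypothetical, heavily simplified model of a kube-proxy-style sync loop.

    It keeps the latest Service and endpoint objects it has been told about
    and regenerates the whole rule list after every change.
    """

    def __init__(self) -> None:
        self.services: Dict[str, Tuple[str, int]] = {}  # name -> (ClusterIP, port)
        self.endpoints: Dict[str, List[str]] = {}       # name -> ["ip:port", ...]

    def handle(self, kind: str, op: str, name: str, obj: Optional[object] = None) -> List[str]:
        """Apply one watch event (ADD/UPDATE/DELETE) and return the new rules."""
        if kind == "Service":
            store = self.services
        elif kind == "Endpoints":
            store = self.endpoints
        else:
            raise ValueError(f"unsupported kind: {kind}")
        if op == "DELETE":
            store.pop(name, None)
        else:
            store[name] = obj
        return self.render()

    def render(self) -> List[str]:
        """Rebuild every rule from the current state (TOY-* chain names are made up)."""
        rules: List[str] = []
        for name in sorted(self.services):
            cluster_ip, port = self.services[name]
            chain = "TOY-SVC-" + name.replace("/", "-")
            rules.append(f"-A TOY-SERVICES -d {cluster_ip}/32 -p tcp --dport {port} -j {chain}")
            eps = self.endpoints.get(name, [])
            for i, ep in enumerate(eps):
                stat = ""
                if i < len(eps) - 1:
                    stat = f" -m statistic --mode random --probability {1 / (len(eps) - i):.5f}"
                rules.append(f"-A {chain} -p tcp{stat} -j DNAT --to-destination {ep}")
        return rules


proxy = ToyProxy()
proxy.handle("Service", "ADD", "default/nginx", ("10.109.91.212", 80))
for line in proxy.handle("Endpoints", "UPDATE", "default/nginx", ["10.0.1.79:80", "10.0.0.224:80"]):
    print(line)
```

Keeping Services and endpoints in separate stores means events can arrive in any order; the rules always reflect whatever both stores currently hold.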